Learning with Association Rules using Python

The learning with association rules we see it applied mainly in the recommendation systems, as in the case where we are shown that the people who bought this product also bought this one .. or those who saw such a movie also recommend these others, etc.

For this, the algorithm a priori is one of the most used in this topic and allows to find efficiently sets of frequent items, which are the basis for generating association rules between the items.

First identify the items frequent datasets within the data set and then extend it to a larger set as long as those data sets appear consistently and frequently in accordance with a threshold settled down.

The algorithm is applied mainly in the analysis of commercial transactions and prediction problems. That is why the algorithm is designed to work with databases that contain transactions such as products or items purchased by consumers, or details about visits to a website, etc.

The way to generate association rules It consists of two steps:

- Generation of frequent combinations: whose objective is to find those sets that are frequent in the database. To determine the frequency, a threshold is established.

- Generation of rules: Based on the frequent sets, the rules are created based on the ordering of an index that establishes the groups of items or frequent products.

The index for the generation of combinations is called support and the index for generating rules is called confidence.

Algorithm

- Step 1. The minimum values for support and confidentiality are established

- Step 2. All subsets of transactions that have a support greater than the minimum support value are taken.

- Step 3. Take all the rules of these subsets that have a confidence greater than the minimum confidence value.

- Step 4. Order the rules in a decreasing way based on the value of the lift.

Si quieres ver el tema en video, checalo aquí y suscribete al canal en Youtube.

Example

If we have a set of 5 transactions with different products in each of them according to the following table

| 1 | Bread, milk, diapers |

| 2 | Bread, diapers, beer, egg |

| 3 | Milk, diapers, beer, soda, coffee |

| 4 | Bread, milk, diapers, beer |

| 5 | Bread, soda, milk, diapers |

The first step is to generate the frequent compilations, and, if we want more than 50% support, then we count the frequency of each of the articles, that is, in how many transactions each of the articles appear.

| Article | Transactions |

| Beer | 3 |

| Bread | 4 |

| Soda | 2 |

| Diapers | 5 |

| Milk | 4 |

| Egg | 1 |

| Coffee | 1 |

To calculate the support of each article, we divide the number of transactions of each article, among the total of transactions. That is, for beer we have that appears in 3 of the 5 transactions, then it is 3/5 = 0.6 which represents 60%. For the rest of the articles we have the following:

| Article | Support |

| Beer | 60% |

| Bread | 80% |

| Soda | 40% |

| Diapers | 100% |

| Milk | 80% |

| Egg | 20% |

| Coffee | 20% |

Since more than 50% support is required, we eliminate all items below this threshold: refreshment, egg and coffee.

The next step is to generate the combinations with the products that were left to iterate first with combinations of two, calculate the support and then with combinations of 3 and so on.

| Sets | Frequency | Support |

| Beer, Bread | 2 | 40% |

| Beer, Diapers | 3 | 60% |

| Beer, Milk | 2 | 40% |

| Bread, diapers | 4 | 80% |

| Bread, Milk | 3 | 60% |

| Diapers, Milk | 4 | 80% |

We eliminate those that are below 50% and we are left with the first frequent sets whose support is higher than 50%

| Beer, Diapers |

| Bread, diapers |

| Bread, Milk |

| Diapers, Milk |

From the generated sets, we create sets of three articles and calculate their support

| Sets | Frequency | Support |

| Beer, Diapers, Bread | 2 | 40% |

| Beer, diapers, milk | 2 | 40% |

| Bread, diapers, milk | 3 | 60% |

| Bread, Milk, Beer | 1 | 20% |

In these combinations of three, we only have the set consisting of Bread, Diapers and Milk, which we use to make combinations of 4 items, however for this case, they have 20% support so, here ends the argorithm.

The result showed an element of 3 articles and four of 2 articles:

| Bread, diapers, milk |

| Beer, Diapers |

| Bread, diapers |

| Bread, Milk |

| Diapers, Milk |

From these 5 sets we obtain the association rules, for which we establish that we also want a higher index 50%. This index is the confidence and we calculate it dividing the repetitions of the observations of the set between the repetitions of the rule:

Taking the first set of Bread, Diapers, Milk, the possible rules are:

- Bread => Diapers, Milk

- Diapers => Bread, Milk

- Milk = Bread, Diapers

- Bread, diapers => Milk

- Bread, Milk => Diapers

- Milk, Diapers => Bread

If we take the first rule: Pan => Diapers, Milk we observe that in the original transactions that Bread, diapers, milk appears in 3 transactions and the Pan rule appears in 4 transactions, so the confidence is 3/4 = 0.75, which is 75%

For the rule formed by: Bread, Diapers => Milk we have the combination Bread, Diapers, Milk appears in 3 transactions and the rule Diapers, Milk in 4 transactions so your confidence is 75% too, that is 3/4 = 0.75

Once we calculate the confidence of all the rules, we order them from highest to lowest based on that calculated confidence and we obtain the association rules for the whole set, which is how the algorithm works A priori.

A Priori with Python

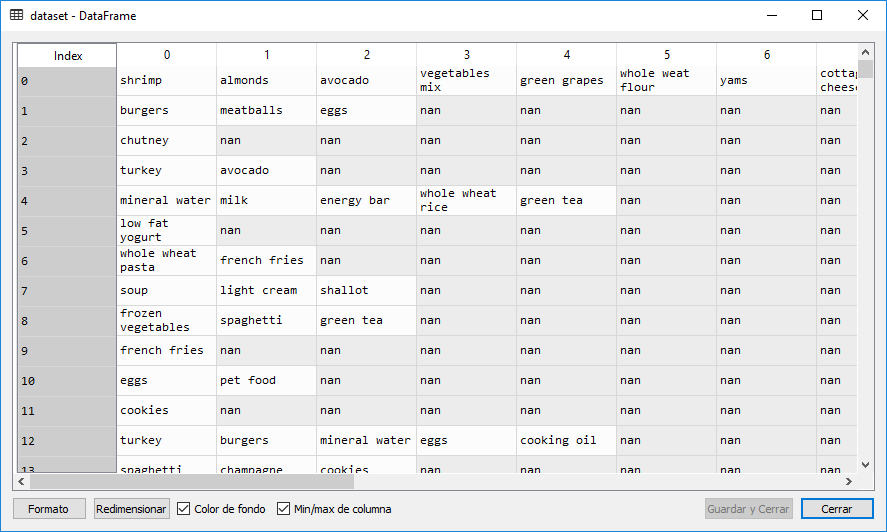

For the example with python We will use a business transaction data set called: Market_Basket_Optimisation.csv with 7,501 records or transactions, each of which contains one or more products of a supermarket:

We observe the resulting rules with 2, 3 or more items that imply another group of products and we also have the support, the confidence and the lift.

Apriori Class

The a priori class used in the previous implementation is the following:

Both files must be in the same folder in order to use the class in the script that creates the association rules.

Are Python an insurance that has already been associated?

No at all

what is the Association Rules?

Association rules are used to discover facts that occur in common within a given dataset. The relation among variables in big datasets. In sales, for example, the probability that a customer who buy product A, also includes Product B